¶ Introduction to the Internet's Transformative Impact

If you are listening to my words right now, then you are obviously an internet user. The internet has arguably been the most transformative technology of the last 50 years. But it wasn't developed overnight or all at once. It was a gradual process to solve specific problems, and no one knew at the time that it would become the basis of a global network of computers. Learn more about the origins of the internet and how it was created on this episode of Everything Everywhere Daily.

¶ Sponsor Messages

This episode is sponsored by Stitch Fix. Gary, you're such a fashionable dresser. Said no one ever. I've always been, how shall I say this, a very simple dresser. However, I do acknowledge the need for having some clothes that make you look your best. And I also realize that I am probably not the best person to be making fashion choices. That is where Stitch Fix comes in.

I set up an account on Stitch Fix. I gave them all my size information and I also went through a series of onboarding questions to tell them what sort of clothes I like and what my budget is. For example, I'm not really big into floral prints or patterns. I'm more of a solid colors guy.

Once they have the information, a real human fashion consultant will pick out clothes for you and send a box. My most recent box had two button-up shirts, a short-sleeved t-shirt, a light sweater, and a pair of pants. Everything looked great and cost far less than had I gone and purchased it myself. Make style easy. Get started today at stitchfix.com slash everywhere. That's stitchfix.com slash everywhere.

Get started with the commerce platform made for entrepreneurs. Shopify is specially designed to help you start, run, and grow your business with easy, customizable themes that let you build your brand. Marketing tools that get your products out there. Integrated shipping solutions that actually save you time. From startups to scale-ups, online, in person, and on the go. Shopify is made for entrepreneurs. Sign up for your $1 a month trial at Shopify.com.

¶ Early Computing and the Need for Networking

Almost everyone in the world uses the internet in some form every day, yet most people have never considered where it came from or even how it works. There is an argument to be made that the internet, when taken as a whole, and all the information that it contains and all the communication that it facilitates, is the greatest thing ever built in the history of humanity.

But it didn't start that way. The internet had rather modest goals to begin with, even though a few visionary people knew from very early on just what potential it had. The computing landscape in the 1960s was radically different from what we know today. Computers were enormous, expensive, and rare, typically housed in government facilities, large research institutions, and major corporations.

They cost hundreds of thousands or even millions of dollars, and an entire university or department would often have to share a single computer. Early computers such as the IBM 7090 or the CDC 6600 filled entire rooms, required specialized staff to operate, and were often isolated from one another physically and functionally.

Access to a computer was precious and carefully scheduled. Users would write programs, often on punch cards, submit them to an operator, and wait hours or even days for results. Real-time interaction was virtually non-existent. Moreover, the software environment was highly localized. Each machine had its own operating system, and data formats were often proprietary.

As computers became more capable and universities, laboratories, and military installations invested in them, a problem began to emerge: fragmentation and isolation. Institutions could not easily share data, collaborate on software development, or coordinate research efforts. Every organization was essentially an island. At the same time, by the early 1960s, the concept of time-sharing started to take hold.

Time-sharing allowed multiple users to interact with a computer at once by quickly switching between different tasks, opening the door to a more dynamic, interactive mode of computing. This suggested a future in which computers could support communities of users, not just isolated programmers, and hinted at the greater potential for networking.

There was also a growing strategic need to make better use of expensive computational resources, particularly within the United States government and military research agencies like ARPA, the Defense Department's Advanced Research Projects Agency. Different research centers often had complementary strengths. One might have better software tools, another more powerful hardware, and another specialized expertise.

Yet moving data or programs physically via magnetic tapes or printouts between sites was slow, inefficient, and unreliable. Remote access was clearly desirable. Researchers needed a way to share resources, exchange ideas, and collaborate without being physically present at the same site.

¶ Packet Switching: A Decentralized Communication Method

Around the same time, another problem was being considered. In the 1960s, communications systems like the telephone network were largely based on circuit switching. In circuit switching, when two parties communicate, a dedicated physical circuit is held open between them for the entire conversation. If that line were cut or damaged, say, by a nuclear bomb, communications would immediately fail.

Moreover, while the line was open, it could not be used by anyone else, even if neither party was speaking at that moment, which was an inefficient use of resources. Paul Baran, who worked at the RAND Corporation in the United States, and Donald Davies in the United Kingdom began asking: what if communication could be made more decentralized and dynamic? In 1964, Baran proposed a radical alternative.

Instead of fixed lines, messages could be broken into small pieces. Each piece could travel independently through whatever routes were available at the time, and the receiving system could then reassemble them. Each packet of data would carry not just its contents, but also a destination address. This approach had several theoretical advantages over circuit switching. The first was resilience.

If one path or node were destroyed, packets could be automatically routed through other working paths. The system didn't require a single fragile central hub. Second was efficiency. Since packets could share network paths with packets from many other users, bandwidth could be dynamically used instead of sitting idle, like a reserved phone line. Third was scalability. More users could be added without having to lay out an entirely new set of dedicated lines for each connection.

And finally, it was cheaper. Sharing common infrastructure lowered the overall cost compared to maintaining many individual dedicated circuits. Baran's idea envisioned a network with no single point of failure, which was extremely attractive to military planners worried about the survivability of command and control systems during a nuclear conflict.
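To make the resilience point concrete, here is a minimal sketch of routing around a destroyed node. The five-node mesh, the node names, and the `find_route` helper are all made up for illustration, and the breadth-first search is simply one easy way to find a working path, not the routing method Baran or ARPANET actually used.

```python
from collections import deque

# Hypothetical five-node mesh; the node names are illustrative only.
NETWORK = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}


def find_route(network, source, destination, failed=frozenset()):
    """Return a shortest list of hops that avoids failed nodes, or None."""
    if source in failed or destination in failed:
        return None
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == destination:
            return path
        for neighbor in network[node]:
            if neighbor not in visited and neighbor not in failed:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # every remaining path runs through a failed node


print(find_route(NETWORK, "A", "E"))                # ['A', 'B', 'D', 'E']
print(find_route(NETWORK, "A", "E", failed={"B"}))  # ['A', 'C', 'D', 'E']
```

In this toy mesh, losing node B just shifts traffic onto the path through C. Node D, on the other hand, is exactly the kind of single point of failure that the design was meant to avoid.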

Meanwhile, Davies, who coined the term packet, was motivated by the efficient use of expensive computer systems in civilian settings, such as time-sharing large mainframes among many users.
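The basic packet idea described in this section can be sketched in a few lines. This is only an illustration under stated assumptions: the `Packet` fields, the 8-character packet size, and the function names are invented here, and the sequence number is an addition that lets the receiver put the pieces back in order (the description above only mentions a destination address).

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    destination: str  # address of the receiving system
    sequence: int     # position of this piece in the message (assumed field)
    payload: str      # the piece of the message itself


def packetize(message, destination, size=8):
    """Break a message into small, individually addressed packets."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(destination, seq, chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets):
    """Rebuild the message no matter what order the packets arrived in."""
    ordered = sorted(packets, key=lambda p: p.sequence)
    return "".join(p.payload for p in ordered)


message = "LOGIN from UCLA to Stanford"
packets = packetize(message, destination="SRI")

# Simulate packets taking different routes and arriving out of order.
arrived = packets[:]
random.shuffle(arrived)
print(reassemble(arrived) == message)  # True
```

Because every packet carries its own address and position, no dedicated line has to stay open between sender and receiver.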

¶ ARPANET: Combining Ideas into Reality

Here, I should also mention the work of the visionary that I mentioned earlier, Joseph Carl Robnett Licklider. Licklider joined ARPA in 1962 and wrote a paper on something he called the Galactic Network. It was a visionary concept imagining a globally interconnected set of computers through which anyone, anywhere could quickly access data and programs from any site.

Licklider envisioned a network that would allow widespread information sharing, collaboration amongst distant researchers, and even real-time communication. In essence, it was an early sketch of what would later become the internet. The network ideas of Licklider and the packet switching ideas of Paul Baran were combined in an actual proposal known as ARPANET.

When ARPANET first launched in 1969, its initial networking protocol was called Network Control Protocol, or NCP, which had an early form of packet switching. To handle the packet switching, computers called interface message processors had to be installed in each location. These processors were about the size of a large refrigerator and were equivalent to the router you might have in your home.

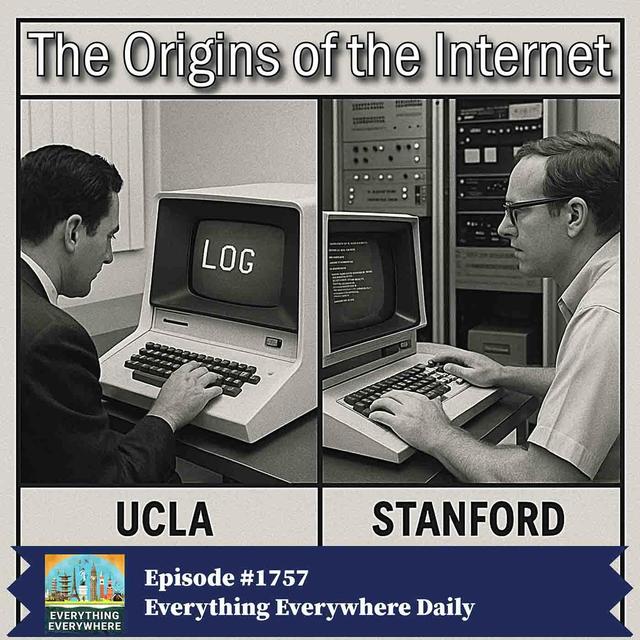

The first interface message processor was installed at UCLA in September 1969. The second was installed at the Stanford Research Institute in October. And once you have two nodes, you have a network. On October 29th, 1969, the first message was sent from UCLA to Stanford, attempting to log in by typing the word login. However, the system crashed after receiving only the first two letters, LO. This humble beginning marked the first transmission on what would become the internet.

By December, UC Santa Barbara and the University of Utah were also connected to the network.

¶ From NCP to TCP/IP: The Internet's Foundation

The Network Control Protocol, which ran ARPANET, began to run into limitations in the early 1970s. Its primary weakness was that it was designed for communication within a single, relatively controlled network and could not handle the complexities of connecting multiple independent networks.

NCP assumed that the underlying packet-switched network would reliably deliver data, so it did not include provisions for dealing with packet loss, retransmissions, or routing failures, which were among the original reasons for having packet switching in the first place. Furthermore, NCP lacked a standardized system for addressing hosts across different networks, making it impossible to interconnect emerging packet networks such as satellite, wireless, and local area networks.

As the number and diversity of computer networks grew in the 1970s, it became clear that NCP's limitations in flexibility, scalability, and fault tolerance made it inadequate for the future needs of networking. And as an aside, this proliferation of networks began being called the inter-network or the network of networks. And the inter-network was eventually shortened to internet.

In response to this problem, Robert Kahn, then at DARPA, proposed a new idea for open architecture networking in 1973. Instead of treating the network as a single homogeneous entity, the new system would treat each network as a black box with its own internal methods. This would ensure that packets could travel across different networks without modification.

Kahn partnered with Vinton Cerf, who was working at Stanford University at the time, and together they began designing a new protocol to overcome NCP's weaknesses. Their new protocol was called Transmission Control Protocol, or TCP. However, the protocol still had weaknesses. During development, it became clear that splitting TCP into two distinct layers would provide greater flexibility.

This modular design allowed different kinds of applications, some needing reliability, like file transfers, and others prioritizing speed, like real-time voice communication, to make different demands on the network without burdening the entire system. The first protocol is known as Internet Protocol, or IP. IP is responsible for addressing and routing. It ensures that packets of data know how to travel across networks to reach the correct destination.

Every device connected to a network using IP has an IP address. When data is sent, IP breaks it into smaller units called packets, each labeled with the source and destination IP addresses. IP then forwards these packets across various interconnected networks using routers to direct them towards their destination. However, IP itself is unreliable. It doesn't guarantee that packets arrive in order, arrive at all, or arrive uncorrupted.

Its job is simply to move packets from point A to point B as best it can, even if the path changes mid-journey. The second protocol, TCP, ensures reliable communication between two computers. It operates at a higher layer than IP and solves the problems that IP leaves open. TCP establishes a connection between the sender and the receiver before data transmission starts, a process called a handshake, and it ensures that packets arrive in the correct order and without errors.

If a packet is lost, duplicated, or arrives out of sequence, TCP detects this and retransmits packets or reorders them as necessary. TCP also manages flow control to avoid overwhelming a slow receiver with too much data at once, and congestion control to adjust the sending rate if the network becomes too busy. Together, these protocols became known as TCP/IP. Although the protocols have been updated since then, they still form the basis for the entire internet today.
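The division of labor described above, an unreliable delivery layer underneath and a reliability layer on top, can be illustrated with a toy simulation. This is a sketch and not real TCP: the function names, the loss and duplication rates, and the round-based resend loop are all assumptions made for illustration. It leaves out the handshake, timers, flow control, and congestion control entirely, and it assumes acknowledgments are never lost.

```python
import random


def unreliable_channel(segments, rng, loss_rate=0.3, dup_rate=0.2):
    """Best-effort delivery: drops some segments, duplicates some, shuffles the rest."""
    delivered = []
    for segment in segments:
        if rng.random() < loss_rate:
            continue  # segment lost in transit
        delivered.append(segment)
        if rng.random() < dup_rate:
            delivered.append(segment)  # segment duplicated in transit
    rng.shuffle(delivered)  # arrival order is not guaranteed
    return delivered


def reliable_transfer(chunks, rng, max_rounds=1000):
    """Resend unacknowledged segments until every chunk has arrived once."""
    pending = dict(enumerate(chunks))  # sequence number -> chunk not yet acknowledged
    received = {}                      # receiver's buffer, keyed by sequence number
    rounds = 0
    while pending:
        rounds += 1
        if rounds > max_rounds:
            raise RuntimeError("gave up: the channel is losing too much")
        for seq, chunk in unreliable_channel(list(pending.items()), rng):
            received.setdefault(seq, chunk)  # duplicates are ignored
        for seq in received:
            pending.pop(seq, None)  # acknowledged, so stop resending
    # Deliver to the application in sequence order, whatever the arrival order was.
    return [received[seq] for seq in range(len(chunks))], rounds


chunks = ["TCP ", "puts ", "it ", "back ", "together"]
result, rounds = reliable_transfer(chunks, random.Random(42))
print("".join(result))  # TCP puts it back together
```

The sequence numbers do most of the work here: they let the receiver discard duplicates, restore the original order, and tell the sender which pieces still need to be resent.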

The split in protocols took place in 1978, and it was immediately obvious that TCP/IP was the future and was going to replace NCP. After years of testing and refinement, the official switch from NCP to TCP/IP on ARPANET was scheduled for January 1, 1983, known as Flag Day, when all nodes had to transition to the new standard. This event is often considered the real birth of the modern internet.

¶ Applications Built on TCP/IP and Conclusion

TCP/IP, of course, is just the base layer of the internet. The average internet user has no clue what's happening at the lowest levels of the network. What they're familiar with are the many things that have been built on top. Email became a popular application in the 1970s, but there was no standardized method for sending emails between networks. SMTP, or Simple Mail Transfer Protocol, was developed in the early 1980s to create a standardized way for computers to send email across networks.

FTP, or File Transfer Protocol, was one of the earliest application protocols designed for ARPANET, with its first version specified in 1971. FTP was created to allow users to reliably transfer files between remote computers over the network, addressing the need for researchers to share software, documents, and datasets. It ran over NCP initially and was later adapted to TCP/IP.

Usenet was created in 1979 by two graduate students, Tom Truscott and Jim Ellis at Duke University. They wanted to build a decentralized system for sharing messages and discussions amongst computer users. Inspired by the idea of ARPANET but without access to its restricted network, they designed Usenet to work over simple dial-up telephone connections using the Unix-to-Unix Copy Protocol, or UUCP.

The system allowed users to post and read messages in organized categories called newsgroups, effectively creating one of the world's first large-scale online communities. And of course, I haven't even mentioned the World Wide Web and Hypertext Transfer Protocol, or HTTP, which was the thing that really made the internet explode in popularity. But that story I will save for a later episode.

The computer scientists at UCLA and Stanford who made the first internet connection in 1969 could never have guessed that that simple act would be the start of a revolution that would change the world, for the better and for the worse.

¶ Credits and Listener Review

The executive producer of Everything Everywhere Daily is Charles Daniel. The associate producers are Austin Okun and Cameron Kiefer. Today's review comes from listener SSW Environ over on Apple Podcasts in the United States. They write,

Gary writes great episodes on topics you never thought you wanted to know about until you hear him speak of them. You will look at the title of an episode, such as The History of Salt, and wonder why you would like to listen. And then you listen and you wonder no more. Fascinating topics will have you listening to multiple episodes when you may have only wanted to learn a single topic. This is my go-to for a long walk or run.

Well, thanks, SSW. I'm glad you enjoyed the episode on salt. Perhaps in the future I could do one on pepper, and then maybe an episode on salt and pepper. On second thought, I probably don't want to push it. Remember, if you leave a review or send me a boostagram, you too can have it read on the show.